When the internet was still in its infancy, many people honestly believed that it would make the world a better, more informed place. In 1999, Bill Gates himself, in a clear tip of the hat to Marshall McLuhan, proclaimed: "The Internet is becoming the town square for the global village of tomorrow." Three years later, noted media critic Noam Chomsky stated: "The internet could be a very positive step towards education, organization and participation in a meaningful society."

Somehow, by today's standards, these claims seem both naive and misguided. Despite the revolutionary effect it has had on the way we work, play, communicate, and live, the internet has come to be viewed by many with a general air of mistrust. This is due in large part to the proliferation of hate speech, conspiracy theories, misinformation, and pornography that has followed in its wake, not to mention the way it has been seized upon by advertising and business interests for their own ends.

It is against this backdrop that a team of Google engineers, in an attempt to restore accuracy and reliability to mankind's greatest tool, has proposed a new technology for ranking web pages. In a research paper released in February and later reported on by New Scientist, the team described a way of ranking search results by their factual accuracy rather than by their popularity.

Google data centers (one pictured here) provide information to millions of users worldwide. Credit: Google

Currently, web pages are ranked largely by how many other pages link to them. This is a poor measure of accuracy, since viral hoaxes attract plenty of links simply because people are talking about them. As a result, pages filled with inaccurate information (or outright lies) can turn up at the top of Google searches and be treated as real.
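To see why counting links rewards popularity rather than accuracy, here is a minimal sketch of the textbook PageRank power iteration run on a tiny, made-up link graph. The page names, the damping factor of 0.85, and the iteration count are illustrative assumptions; Google's production ranking combines many more signals than this.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank computed by power iteration.

    links maps each page to the list of pages it links to. Pages with no
    outgoing links ("dangling" pages) spread their score evenly over all pages.
    """
    pages = sorted(set(links) | {p for targets in links.values() for p in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets the same baseline "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: distribute its score uniformly.
                share = damping * rank[page] / n
                for t in pages:
                    new_rank[t] += share
        rank = new_rank
    return rank


# Hypothetical graph: three blogs link to a viral hoax,
# while only one of them links to an accurate but obscure report.
links = {
    "blog_a": ["viral_hoax"],
    "blog_b": ["viral_hoax"],
    "blog_c": ["viral_hoax", "accurate_report"],
    "viral_hoax": [],
    "accurate_report": [],
}

ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page:16s} {score:.3f}")
```

The hoax ends up ranked above the accurate report purely because more pages point to it; nothing in the computation looks at whether either page is true.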

As the paper states: "Quality assessment for web sources is of tremendous importance in web search. It has been traditionally evaluated using exogenous signals such as hyperlinks and browsing history. However, such signals mostly capture how popular a webpage is. For example, the gossip websites listed in mostly have high PageRank scores, but would not generally be considered reliable. Conversely, some less popular websites nevertheless have very accurate information."

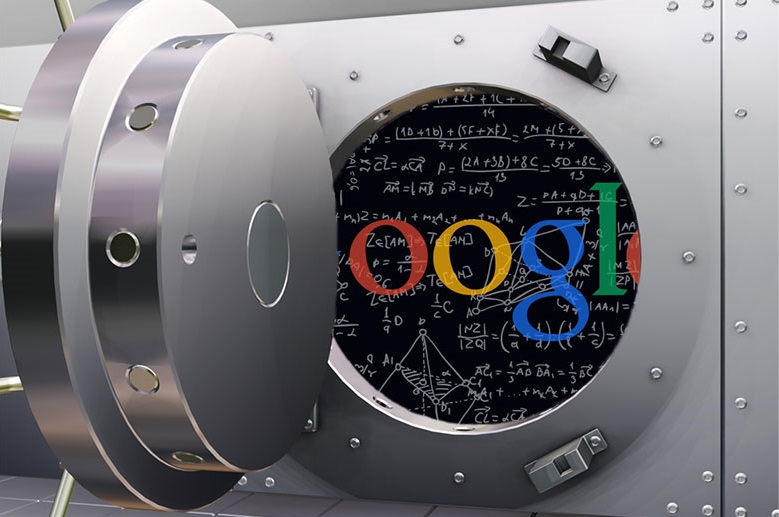

Rather than relying on such exogenous signals, the Google engineers behind the new fact-checking system propose relying on endogenous ones. In practice, this means using an adapted algorithm to pick out the factual statements on a page and compare them against Google's Knowledge Vault, an extensive database of facts with which Google intends to replace its Knowledge Graph.
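As a rough illustration of this endogenous approach, the sketch below represents each extracted claim as a (subject, predicate, object) triple, the general form in which the Knowledge Vault stores facts, and looks it up in a small in-memory stand-in for the Vault. The example triples, the `KNOWLEDGE_BASE` dictionary, and the `check_claim` helper are hypothetical; the extraction and comparison pipeline described in the paper is probabilistic and far more involved.

```python
from typing import Optional

# Hypothetical stand-in for a knowledge base: maps (subject, predicate)
# to the object value the knowledge base believes is correct.
KNOWLEDGE_BASE = {
    ("Barack Obama", "nationality"): "USA",
    ("Paris", "capital_of"): "France",
}


def check_claim(subject: str, predicate: str, obj: str) -> Optional[bool]:
    """Compare one extracted claim against the knowledge base.

    Returns True if the knowledge base agrees, False if it holds a different
    value, and None if it has no entry to compare against.
    """
    known = KNOWLEDGE_BASE.get((subject, predicate))
    if known is None:
        return None
    return known == obj


claims = [
    ("Barack Obama", "nationality", "USA"),
    ("Barack Obama", "nationality", "Kenya"),
    ("Paris", "population", "2.1 million"),
]
for claim in claims:
    print(claim, "->", check_claim(*claim))
```

Returning None rather than False keeps claims that the knowledge base simply cannot check from counting against a page.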

Google's Knowledge Vault would serve as a research database for evaluating web pages for accuracy. Credit: motoridiricerca-seo.net

The system would also attempt to assess the trustworthiness of the source (for example, a reputable news site versus a newly created WordPress blog). Another component of the strategy involves looking at "topic relevance," where the algorithm scans the name of the site and its "about" section for information on its goals. From all this, it would generate what the researchers call a "Knowledge-Based Trust" score for every web page.
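At its simplest, a Knowledge-Based Trust score can be thought of as the share of a page's checkable facts that turn out to be correct. The sketch below computes that fraction from per-claim verdicts (True, False, or None for unverifiable), with add-one smoothing so that a page with very few checkable facts is not scored 0 or 1 outright. The smoothing, the `kbt_score` function, and the example pages are assumptions for illustration only; the paper's actual model also accounts for errors made while extracting facts, and this sketch leaves out the source-reputation and topic-relevance signals entirely.

```python
from typing import Iterable, Optional


def kbt_score(verdicts: Iterable[Optional[bool]],
              prior_correct: float = 1.0,
              prior_incorrect: float = 1.0) -> float:
    """Smoothed fraction of a page's checkable facts that are correct.

    verdicts: one entry per extracted claim; True = matches the knowledge
    base, False = contradicts it, None = cannot be checked (ignored).
    The priors act as pseudo-counts (add-one smoothing by default).
    """
    correct = incorrect = 0
    for v in verdicts:
        if v is True:
            correct += 1
        elif v is False:
            incorrect += 1
    return (correct + prior_correct) / (
        correct + incorrect + prior_correct + prior_incorrect
    )


# Hypothetical pages, with verdicts from comparing each page's claims
# against a knowledge base.
pages = {
    "obscure_but_accurate": [True, True, True, True, None],
    "popular_gossip": [True, False, False, False, None, None],
    "no_checkable_facts": [None, None],
}
for name, verdicts in pages.items():
    print(f"{name:22s} {kbt_score(verdicts):.2f}")
```

Here the obscure page scores 5/6 and the gossip page 2/6, regardless of how many links either one attracts, while a page with nothing checkable falls back to a neutral 0.5.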

While this may sound like a tall order, the basic tools for accomplishing it are already in place. To evaluate a stated fact for accuracy, only two things are necessary: the fact itself and a reference work to compare it against. The Knowledge Graph already provides the latter, regularly culling details from services like Freebase, Wikipedia, and the CIA World Factbook to stay up to date. With the Vault as its own internal research database, Google would find it even easier to compare stated claims against established knowledge.

The paper does not specify when, or even if, such a system would be put in place; for the time being, it remains a theoretical proposal. And while an algorithm that compares claims made on the internet against a large internal database cannot be guaranteed to sort truth from fiction in every case, and does raise some philosophical questions about the nature of truth versus fact, it is a very interesting and practical concept.

Numerous methods are being instituted to determine the veracity of internet information. Credit: zayzay.com

Not surprisingly, Google is not alone in wanting to vet the information it provides to its users more carefully. Back in January, Facebook announced that it would update its News Feed software to flag stories that might be false and to limit their spread. The change lets users explicitly flag a post as a "false news story" when they come across something in their feeds they believe to be a lie, which Facebook moderators would then assess (much as reports of abuse or spam are handled now).

In addition, there are plenty of tools that try to help internet users sort fact from fiction. For example, there's LazyTruth, a browser extension that skims inboxes to weed out the fake or hoax emails that regularly circulate. Then there's Emergent, a project from the Tow Center for Digital Journalism at Columbia University in New York, which pulls in rumours from gossip sites and then verifies or debunks them by cross-referencing them against other sources.

If there is one thing the internet has been known for, it is the explosive growth it has brought in terms of information sharing. Several decades later, it is clear that concerns over how it is administered and what kinds of content it brings us are leading to appeals for change. In time, it would not be surprising if there were multiple means of reporting fraud and inaccuracies.

And as with all things relating to the internet, it would be shocking if people didn't find ways to abuse these tools as well. If the globe truly is a village, and the internet its town square, then the same rules apply: caveat emptor, and "don't feed the trolls"!

Top Image Credit: slashgear.com

Sources:

- www.emergent.ca/

- www.lazytruth.com/

- accuracyproject.org/

- arxiv.org/pdf/1502.03519v1.pdf

- www.google.ca/insidesearch/features/search/knowledge.html

- www.mobility-labs.com/2015/fact-checking-googles-fact-checker

- www.theverge.com/2015/2/10/8010673/Google-health-data-knowledge-graph

- www.slate.com/blogs/future_tense/2015/01/20/facebook_hoaxes_news_feed_changes_will_limit_false_news_stories.html

- www.newscientist.com/article/mg22329832.700-googles-factchecking-bots-build-vast-knowledge-bank.html#.VTaEGJNai-d

- www.newscientist.com/article/mg22530102.600-google-wants-to-rank-websites-based-on-facts-not-links.html#.VTF2x5Nai-f

- www.slate.com/blogs/future_tense/2015/03/02/google_researchers_try_search_ranking_based_on_factual_accuracy_instead.html

- www.washingtonpost.com/news/the-intersect/wp/2015/03/02/google-has-developed-a-technology-to-tell-whether-facts-on-the-internet-are-true/