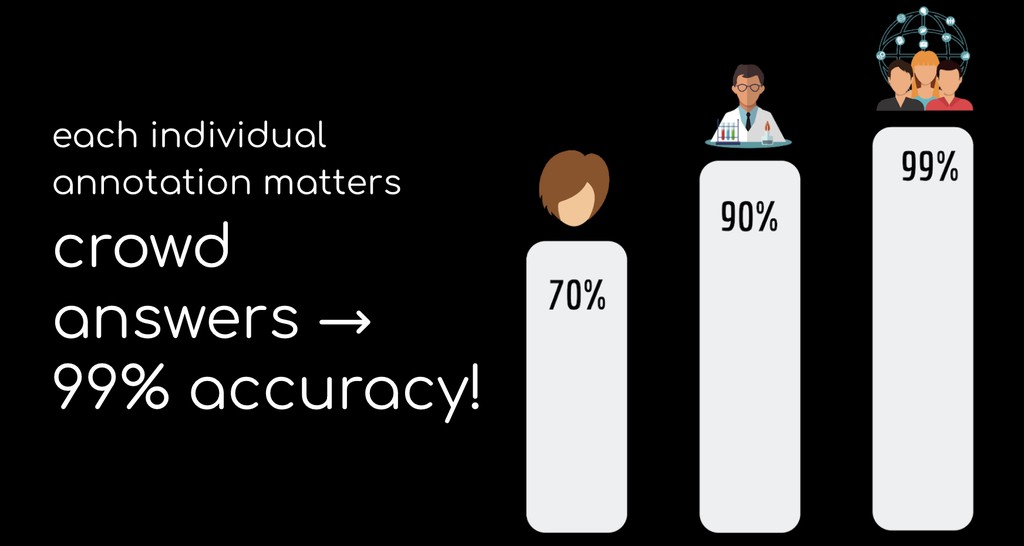

Due to an analytic bottleneck that couldn't be solved with automation, we used citizen science to crowdsource the analysis of Cornell University’s Alzheimer's research data. Having collected 12.4 million crowd-generated annotations, we ran a machine learning (ML) competition that produced 70 new ML models; these perform well but don’t meet our data quality standards. We investigated a new mode of human/machine collaboration that allows these models to participate as autonomous agents alongside humans in our crowdsourcing systems. This “CrowdBot” approach speeds up data analysis, reduces reliance on human annotators, and improves data quality, while providing open source tools that reduce barriers to using ML in biomedical data analysis.

Spanning continents, identities, and cognitive styles, the Human Computation Institute (HCI) reflects diversity and inclusivity in many forms. Each member of HCI brings unconventional thinking, creativity, and the shared goal of creating scalable approaches to helping others. At our heart, we run citizen science and crowdsourcing projects. To produce actionable scientific data, we develop specialized algorithms that achieve expert-like analysis from thousands of untrained human volunteers. Laura Onac, a key contributor to the CrowdBots project, was invited to join HCI when we realized that her winning model in our ML competition was also the subject of her master’s thesis. Laura has worked closely with director Pietro Michelucci to validate the CrowdBot idea: converting imperfect ML models into artificially intelligent, agent-based players in our Stall Catchers citizen science game that speed up human analysis and correct for its errors. Our data sharing agreement gives players access to the datasets they’ve analyzed and credits them as coauthors in resulting publications. Our outreach team collaborates with the biomedical research team to create a narrative about the insights discovered in the crowdsourcing process and reports these findings on our blog.

For eight years, the Human Computation Institute has been applying crowdsourcing techniques to analytic bottlenecks. The CrowdBots approach is our newest innovation; its goal is to extend the value of smaller biomedical imagery datasets by reducing the training requirements typically associated with machine learning approaches to automated analysis. Currently, deriving research-grade classification labels for certain features in two-photon excited fluorescence (2PEF) microscopy or whole slide images (WSI) entails data quality requirements that exceed today’s best automated methods. As a result, biomedical researchers discard ML models trained on small datasets when automation falls short of target levels, such as 95% sensitivity and specificity. We are addressing this by validating and deploying CrowdBots: automated ML agents that participate alongside humans in a crowdsourcing environment.
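To make the "target levels" concrete, here is a minimal sketch of the kind of acceptance check that leads researchers to keep or discard a model. It assumes binary labels (feature present or absent) and uses the 95% figure mentioned above as the default target; the `ConfusionCounts` type and the function names are hypothetical and are not taken from the CrowdBots code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing model predictions with expert ground truth (hypothetical type)."""
    tp: int  # feature present, model said present
    fn: int  # feature present, model said absent
    tn: int  # feature absent, model said absent
    fp: int  # feature absent, model said present


def confusion_from_labels(truth, predicted):
    """Build counts from two equal-length sequences of booleans."""
    pairs = list(zip(truth, predicted))
    return ConfusionCounts(
        tp=sum(1 for t, p in pairs if t and p),
        fn=sum(1 for t, p in pairs if t and not p),
        tn=sum(1 for t, p in pairs if not t and not p),
        fp=sum(1 for t, p in pairs if not t and p),
    )


def sensitivity(c: ConfusionCounts) -> float:
    # True positive rate: share of real positives the model catches.
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    # True negative rate: share of real negatives the model correctly rejects.
    return c.tn / (c.tn + c.fp)


def meets_target(c: ConfusionCounts, target: float = 0.95) -> bool:
    # A model is only usable on its own if BOTH rates reach the target.
    return sensitivity(c) >= target and specificity(c) >= target


if __name__ == "__main__":
    model = ConfusionCounts(tp=90, fn=10, tn=97, fp=3)
    print(sensitivity(model), specificity(model), meets_target(model))
```

In this made-up example, the model's specificity (0.97) passes but its sensitivity (0.90) does not, so on its own it would be discarded. The CrowdBot idea is to keep such a model in play as one participant among many rather than throw it away.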

What’s compelling about our approach is that we’ve created a bespoke digital platform for receiving and processing biomedical research data from Cornell researchers and presenting it in a crowdsourcing game environment, where it is annotated by humans and now also by CrowdBots built from existing ML models. This pipeline enables biomedical researchers to hand off their data to us and place it in a crowdsourced game environment that now contains automated CrowdBot agents, which boost analytic throughput without compromising data quality. This approach adds further value to the original biomedical dataset because the newly generated labels can be used to improve CrowdBot performance, contributing to a virtuous cycle of data aggregation, reuse, and improved model performance that could eventually lead to full automation.
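One way to picture CrowdBots "participating alongside humans" is a weighted vote in which a bot's answer counts like any other participant's answer, scaled by an estimated reliability. The sketch below is a hypothetical illustration of that idea, not the aggregation method used in Stall Catchers: the `Vote` type, the reliability weights, the 0.5 threshold, and the `is_settled` stopping rule (which lets a clip stop collecting votes once the answer is clear) are all assumptions chosen for clarity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    participant_id: str
    is_bot: bool   # True for a CrowdBot agent, False for a human volunteer
    label: bool    # True = feature present
    weight: float  # hypothetical reliability estimate, e.g. from past accuracy


def aggregate(votes, threshold=0.5):
    """Return (consensus_label, weighted_positive_fraction) over all votes."""
    total = sum(v.weight for v in votes)
    if total <= 0:
        raise ValueError("need at least one vote with positive weight")
    positive = sum(v.weight for v in votes if v.label)
    score = positive / total
    return score >= threshold, score


def is_settled(votes, threshold=0.5, margin=0.25, min_weight=1.5):
    """Hypothetical stopping rule: enough total weight and a clear margin."""
    total = sum(v.weight for v in votes)
    if total < min_weight:
        return False
    _, score = aggregate(votes, threshold)
    return abs(score - threshold) >= margin


if __name__ == "__main__":
    votes = [
        Vote("human-1", is_bot=False, label=True, weight=0.8),
        Vote("human-2", is_bot=False, label=False, weight=0.4),
        Vote("crowdbot-a", is_bot=True, label=True, weight=0.6),
    ]
    print(aggregate(votes), is_settled(votes))
```

With these made-up votes, the weighted positive fraction is about 0.78, so the consensus is "feature present" and the item is settled after three votes. In this framing, bots add throughput by helping items reach a settled answer sooner, while human votes still carry weight wherever a model is unreliable.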

Based on our CrowdBot and Stall Catchers experience, we encourage biomedical researchers to train models on their existing datasets and make them available in a digital environment for reuse, subject to their licensing requirements. Citizen science project leaders could then select from these models and build projects on top of the existing small datasets, extending the lifecycle of each dataset while opening the analytical playing field beyond a handful of niche experts on the topic. This process could engender collaborative knowledge engineering: careful, semi-automated workflows that preserve data quality while increasing the number of human minds working on an analytical problem.

Using data management best practices from citizen science associations helps us uphold our responsibilities to project volunteers while meeting the high data accuracy needs of machine learning models in biomedical research. Resources for these data standards and processes can be found on the websites of citizen science associations. We collaborated with DrivenData, an organization with a rigorous competition process that prevents competitors from gaming the system. By combining our technologies (pre-processing, crowd aggregation methods, and post-processing) with DrivenData’s ML competition standards and processes, we were able to convert the winning ML models into effective CrowdBot agents in our game. All of our source code for the CrowdBots project is available on GitHub and on DrivenData’s competition homepage, which enables others to replicate the process.

We cannot overstate how important it is for biomedical researchers to share and document their data and models, yet they lack a place that allows them to do so. In our broader initiative, Civium, we want to promote open science by making it easy to share artifacts transparently and by giving creators the opportunity to specify their own licensing terms, whether or not financial remuneration is involved. Researchers who share the values of open source and open science are also aware of how hard it is to secure sustainable funding. This tension between value-driven behavior and sustainability may stem from the myth that open source means free, when in fact the value lies in the transparency of the source code (and data). In Civium, we provide valuation and credit assignment that track what is being used and how much. Any researcher who posts a digital artifact can determine how they are credited and remunerated. This could apply not only to software widgets but also to online services (e.g., access to a large community of crowdsourcing participants), and usage could be measured in many ways.