Since you will likely have multiple judges evaluating your submissions, one way to help reduce variation in judging is to standardize each judge's scores.

Every judge scores submissions slightly differently. For instance, one judge may consider 75/100 a great score, while another may give 75/100 to the weakest submission they evaluate. If every judge scored every submission, this discrepancy would not bias the average scores, because each judge's tendencies would affect all submissions equally. However, because of the number of submissions received, it is often preferable to randomly assign a subset of judges (say, three out of five) to each submission. Standardizing scores before calculating each submission's average accounts for this natural variation between judges.

Each judge’s scores are standardized by scaling them to have a mean of 0 and a standard deviation of 1. To do so, the judge’s average score is subtracted from each raw score, and the difference is then divided by the standard deviation of that judge’s scores. Once the scores are standardized for each judge, the average standardized score for each submission is calculated.
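As a small worked illustration with made-up numbers (not taken from any real competition), suppose one judge gives raw scores of 60, 70, and 80. The mean is 70. Assuming the sample standard deviation (dividing by n − 1), which is one common choice and not necessarily the one intended here, the standard deviation is 10, and the standardized scores work out to:

$$
\frac{60 - 70}{10} = -1, \qquad \frac{70 - 70}{10} = 0, \qquad \frac{80 - 70}{10} = 1
$$

A second judge who scored the same three submissions 85, 90, and 95 (mean 90, standard deviation 5 under the same assumption) would also end up with −1, 0, and 1, even though that judge's raw scores are all higher.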

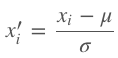

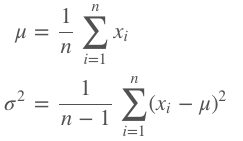

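Given raw scores for a single judge of $x_1, x_2, \ldots, x_n$, with mean ($\mu$) and standard deviation ($\sigma$), the standardized scores are computed as

$$
z_i = \frac{x_i - \mu}{\sigma}, \qquad i = 1, \ldots, n
$$

where $z_i$ is the standardized score for the $i$-th submission the judge evaluated, $\mu = \frac{1}{n}\sum_{i=1}^{n} x_i$ is the mean of that judge's raw scores, and $\sigma$ is the standard deviation of those same scores.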

Then, each judge’s standardized scores are combined into an average score for each submission.